During the first day of CES 2021, Mobileye (an Intel company) discussed their strategy and technology for enabling autonomous vehicles.

For Intel to invite Mobileye president and chief executive officer Amnon Shashua to present during their slot at CES is no small deal. The presentation will be watched by millions, and during an interview with Autonocast co-host Ed Niedermeyer, Mobileye paved a very interesting road ahead.

In the autonomous vehicle industry, there’s a big debate happening. On one side, you have Tesla pioneering a computer vision approach (which also leverages front-facing radar and ultrasonics), versus many others in the industry, like Mobileye, that believe LiDAR has to be part of the solution.

During the Q&A section of Tesla’s Autonomy Day in April 2019, Elon Musk famously said ‘Lidar is a fool’s errand’ and ‘Anyone relying on lidar is doomed’. That was of course based on the lidar tech available at the time, but we are seeing rapid development in this space (see NIO’s integration on their ET7).

It’s possible Musk is right and the FSD beta is certainly showing lots of potential for their solution to allow cars to reach level 4/5 of autonomy, but we’re not there yet.

Mobileye really did something quite interesting today. Although they never called out Tesla directly, anyone familiar with the game knew exactly who they were speaking about. Mobileye presented the argument that their technology is the only safe way of approaching this challenge, using a combination of cameras backed up by redundant radar and lidar.

Shashua suggests that regulators would only approve self-driving cars if you could assure them you have this level of redundancy, which of course Tesla does not.

Musk would certainly argue that if your computer vision technology is good enough, you don’t need the extra cost and complexity that a redundant lidar and radar system brings.

No company has the volume of real-world driving data that Tesla has. Lex Fridman has estimated that Tesla will reach 5.1 billion Autopilot miles in 2021. This translates to around 8.2 billion kilometres, which will be important shortly.
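For anyone who wants to check that conversion, here is a quick sketch in Python. It assumes only the standard definition of the mile (1.609344 km). The 5.1 billion figure is the estimate quoted above, not a measured value, and the variable names are my own.

```python
# Standard definition of the international mile in kilometres.
KM_PER_MILE = 1.609344


def miles_to_km(miles: float) -> float:
    """Convert a distance in miles to kilometres."""
    return miles * KM_PER_MILE


# Estimated Autopilot miles for 2021, as quoted in the article.
autopilot_miles_2021 = 5.1e9
autopilot_km_2021 = miles_to_km(autopilot_miles_2021)

print(f"{autopilot_km_2021 / 1e9:.1f} billion km")
```

Rounded to one decimal place, the result matches the 8.2 billion kilometres quoted above.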

This data helps train the vehicle’s knowledge of the world and can then be leveraged to accommodate the weird edge cases that occur in it. As users report bugs, often by disengaging Autopilot or, in the case of FSD beta participants, by explicitly tapping a report button, Tesla can work on specific issues and eliminate them one by one until we reach a point where the car is provably safe.

Now if you’re the competition and you see no way of catching up to Tesla in data, your solution based on that same tech stack will always come up short. Instead, you’d want to take a different approach, then promote that as the ‘correct’ or ‘safe’ way of achieving autonomous vehicles.

This is actually pretty risky for Tesla: if Mobileye (and their partners) are able to convince legislators that LiDAR is required for safety, then Tesla has a massive problem on their hands.

Shashua mentioned that there is new tech on the way that will help achieve Level 4/5, which he anticipates will enable robotaxis by 2025. That timeline is really important in the context of what Tesla has committed to (remember Elon Time). Musk recently re-committed to delivering their solution this year, pending regulatory approval. If things go well, their fleet of millions of cars could be awake in 2022, which leaves Mobileye’s solution a few years too late.

New Radar and Lidar Technology

Shashua explained that the company envisions a future with AVs achieving enhanced radio- and light-based detection-and-ranging sensing, which is key to further raising the bar for road safety. Mobileye and Intel are introducing solutions that will innovatively deliver such advanced capabilities in radar and lidar for AVs while optimizing computing- and cost-efficiencies.

As described in Shashua’s “Under the Hood” session, Mobileye’s software-defined imaging radar technology has 2,304 channels, a 100 dB dynamic range and a 40 dBc side lobe level, which together enable the radar to build a sensing state good enough for a driving policy that supports autonomous driving.
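To put those decibel figures in perspective, the sketch below converts them to plain ratios using the standard definition of the decibel for power (ratio = 10^(dB/10)). Treating both specs as power ratios is my assumption, as Mobileye did not spell out the measurement convention, and the function name is just for illustration.

```python
def db_to_power_ratio(db: float) -> float:
    """Convert a decibel value to a linear power ratio."""
    return 10 ** (db / 10)


# Spec figures quoted in the article; interpreting them as
# power ratios is an assumption made for this illustration.
dynamic_range_ratio = db_to_power_ratio(100)  # 100 dB dynamic range
side_lobe_ratio = db_to_power_ratio(40)       # 40 dBc side lobe level

print(f"Dynamic range: {dynamic_range_ratio:,.0f} to 1")
print(f"Side lobe suppression: {side_lobe_ratio:,.0f} to 1")
```

On that reading, 100 dB is a ratio of ten billion to one between the strongest and weakest signals the radar can handle, and 40 dBc means the side lobes are ten thousand times weaker than the main beam.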

With fully digital and state-of-the-art signal processing, different scanning modes, rich raw detections and multi-frame tracking, Mobileye’s software-defined imaging radar represents a paradigm shift in architecture to enable a significant leap in performance.

Shashua also explained how Intel’s specialized silicon photonics fab is able to put active and passive laser elements on a silicon chip.

“This is really game-changing,” Shashua said of the lidar SoC expected in 2025. “And we call this a photonic integrated circuit, PIC. It has 184 vertical lines, and then those vertical lines are moved through optics. Having fabs that are able to do that, that’s very, very rare. So this gives Intel a significant advantage in building these lidars.”

One of the biggest criticisms of a solution that relies on HD maps is just how fragile they are to maintain and how expensive they are to generate. The challenge of driving up and down every street of a city to map it (using a human driver) before an autonomous vehicle can navigate those same streets is enormous, and it limits the ability to scale from small areas to a global solution.

That was until now. Mobileye claims they’ve solved this issue.

Worldwide Maps Bring AVs Everywhere

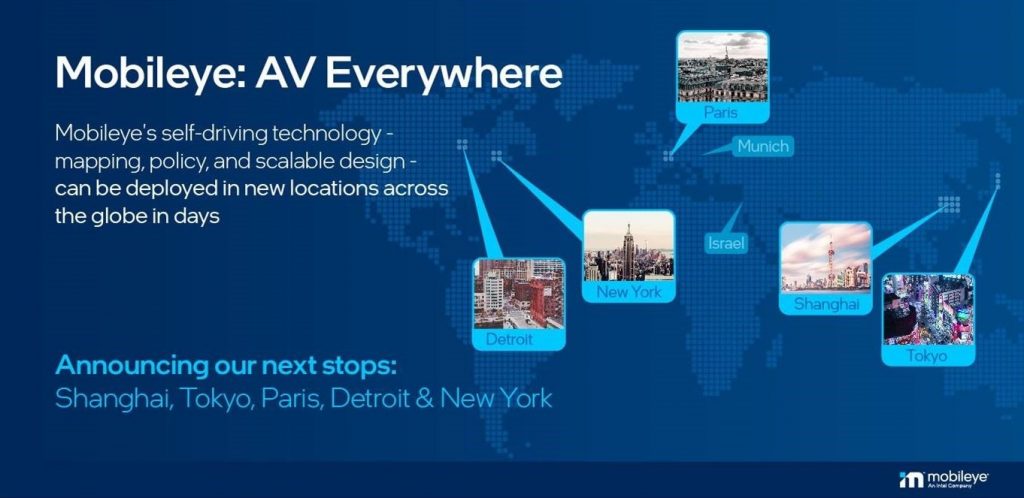

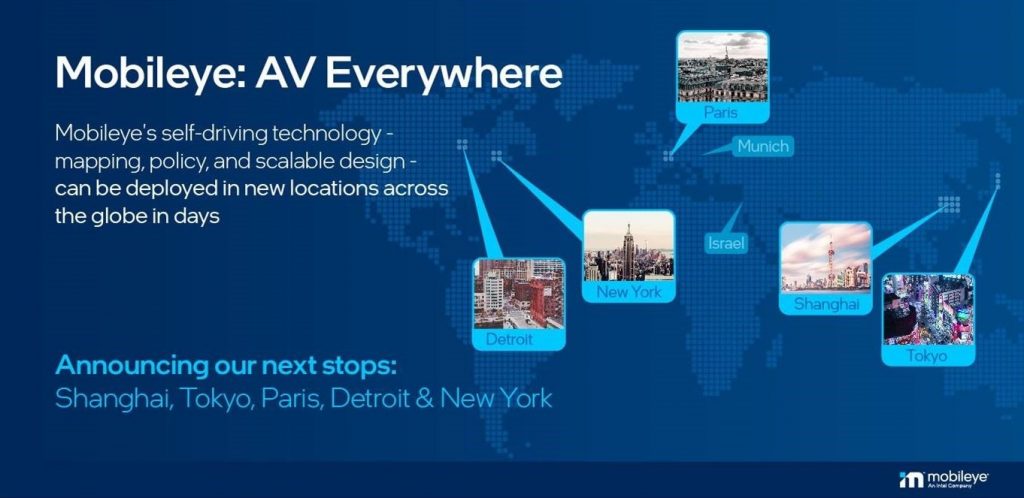

In Monday’s session, Shashua explained the thinking behind Mobileye’s crowdsourced mapping technology. Mobileye’s unique and unprecedented technology can now map the world automatically, with nearly 8 million kilometers tracked daily and nearly 1 billion kilometers completed to date. This mapping process differs from other approaches in its attention to semantic details that are crucial to an AV’s ability to understand and contextualize its environment.
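To get a feel for what those mapping numbers mean, here is a rough back-of-the-envelope sketch. It assumes the daily rate stays constant at 8 million kilometres, which Mobileye has not claimed, and it rounds the "nearly" figures up to round numbers.

```python
# Figures quoted by Mobileye, rounded; a constant daily rate is an assumption.
DAILY_KM_TRACKED = 8e6   # "nearly 8 million kilometers tracked daily"
TOTAL_KM_TO_DATE = 1e9   # "nearly 1 billion kilometers completed to date"

# How many days of driving at today's rate equal the total so far?
days_at_current_rate = TOTAL_KM_TO_DATE / DAILY_KM_TRACKED

# How much would a full year at today's rate add?
km_per_year = DAILY_KM_TRACKED * 365

print(f"Total to date equals {days_at_current_rate:.0f} days at the current rate")
print(f"One year at the current rate: {km_per_year / 1e9:.2f} billion km")
```

At the current rate, the whole billion kilometres represents about 125 days of collection, and a full year would add just under 3 billion kilometres.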

For AVs to realize their life-saving promise, they must proliferate widely and be able to drive almost everywhere. Mobileye’s automated map-making process uses technology deployed on nearly 1 million vehicles already equipped with Mobileye advanced driver-assistance technology.

To demonstrate the scalable benefits of these automatic AV maps, Mobileye will start driving its AVs in four new countries without sending specialized engineers to those new locations. The company will instead send vehicles to local teams that support Mobileye customers. After appropriate training for safety, those vehicles will be able to drive. This approach was used in 2020 to enable AVs to start driving in Munich and Detroit within a few days.

Here’s the issue though: mapping enough of a city to start driving is one thing, but keeping that map current through ongoing road maintenance, upgrades and additions is quite another.

The Mobileye Trinity

In describing the trinity of the Mobileye approach, Shashua explained the importance of delivering a sensing solution that is orders of magnitude more capable than human drivers. He described how Mobileye’s technology combines to deliver such a solution: Road Experience Management™ (REM™) mapping technology, a rules-based Responsibility-Sensitive Safety (RSS) driving policy, and two separate, truly redundant sensing subsystems based on world-leading camera, radar and lidar technology.

Mobileye’s approach solves the scale challenge from both a technology and business perspective. Getting the technology down to an affordable cost in line with the market for future AVs is crucial to enabling global proliferation. Mobileye’s solution starts with the inexpensive camera as the primary sensor combined with a secondary, truly redundant sensing system enabling safety-critical performance that is at least three orders of magnitude safer than humans. Using True Redundancy™, Mobileye can validate this level of performance faster and at a lower cost than those who are doing so with a fused system.
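One way to read that validation claim is as a probability argument. The sketch below is my own illustration, not Mobileye’s published math. It assumes the two sensing subsystems fail independently of each other, and the human baseline of 10,000 hours between failures is a placeholder chosen only to make the arithmetic concrete.

```python
import math

# Hypothetical placeholder, NOT a figure from the article or from Mobileye.
HUMAN_MTBF_HOURS = 1e4

# "At least three orders of magnitude safer than humans" (from the article).
SAFETY_FACTOR = 1e3

# Mean time between failures the complete system would need to reach.
target_mtbf_hours = HUMAN_MTBF_HOURS * SAFETY_FACTOR

# Assumption: the subsystems fail independently, so the system only fails
# when both fail in the same hour: p_system = p_a * p_b.
# With two equally good subsystems, p_sub = sqrt(p_system), and since
# p = 1 / MTBF, each subsystem needs MTBF_sub = sqrt(MTBF_system).
subsystem_mtbf_hours = math.sqrt(target_mtbf_hours)

print(f"Fused system must be validated to {target_mtbf_hours:,.0f} hours")
print(f"Each independent subsystem to about {subsystem_mtbf_hours:,.0f} hours")
```

Under those assumptions, each subsystem only has to be shown to run roughly 3,200 hours between failures, rather than 10 million hours for a single fused system, which is the kind of gap that would make validation faster and cheaper. If failures are correlated (both systems struggling in the same heavy rain, say), the benefit shrinks.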