The world has been stunned by what’s happening with generative AI, and as companies like OpenAI preview Sora for video, excitement about the possibilities keeps growing. While Sora has still not been released to the public, we are seeing lots of teaser clips, and the technology looks revolutionary.

Many have wondered how this technology will impact video editors like Adobe Premiere Pro, and today we have our answer.

Adobe has previewed generative AI within Adobe Premiere Pro which will be released later this year. This will allow new capabilities in video creation and production workflows, delivering new creative possibilities that every pro editor needs to keep up with the high-speed pace of video production.

New generative AI tools coming to Premiere Pro this year will let users streamline video editing, including adding or removing objects in a scene or extending an existing clip. These new editing workflows will be powered by a new video model that will join the family of Firefly models including Image, Vector, Design and Text Effects.

Adobe is continuing to develop Firefly AI models in the categories where it has deep domain expertise, such as imaging, video, audio and 3D, and will deeply integrate these models across Creative Cloud and Adobe Express.

Adobe also previewed its vision for bringing third-party generative AI models directly into Adobe applications like Premiere Pro. Creative Cloud has always had a rich partner and plugin ecosystem, and this evolution expands Premiere Pro as the most flexible, extensible professional video tool that fits any workflow. Adobe customers want choice and endless possibilities as they create and edit the next generation of entertainment and media.

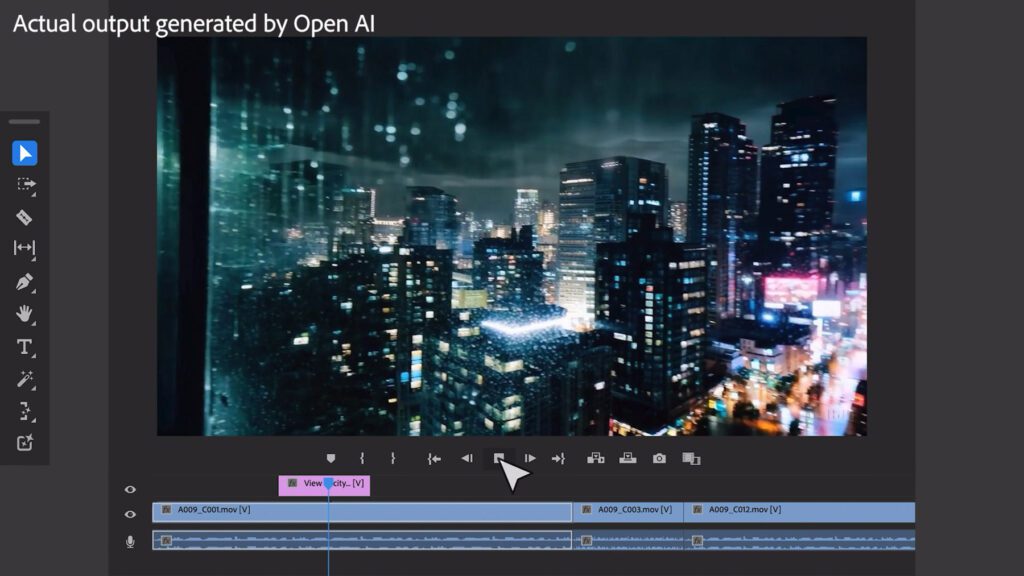

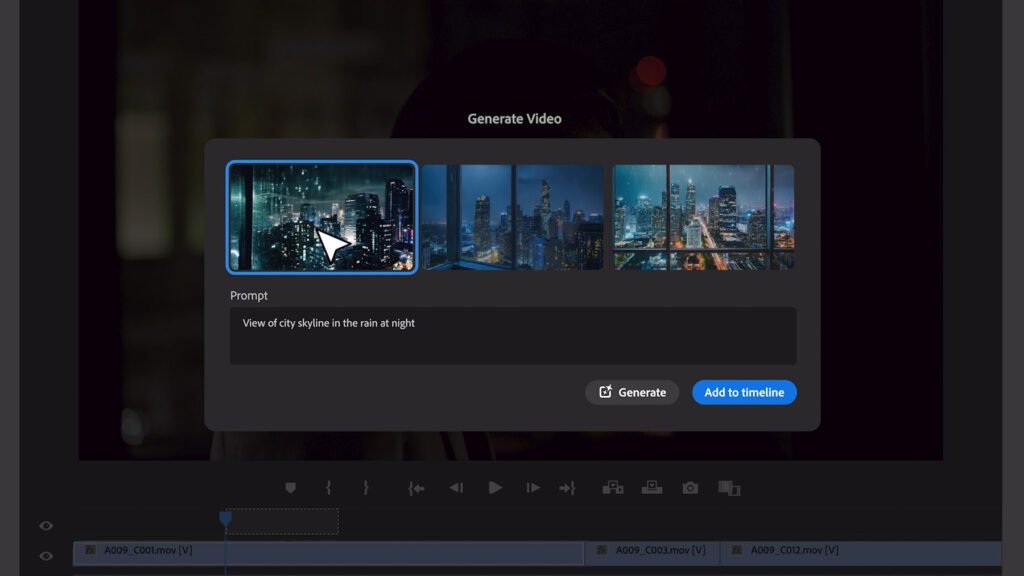

All of the workflows you see in this video are real pixels generated from Adobe’s upcoming Firefly model for video, plus workflows powered by OpenAI’s Sora model, Runway’s Gen-2 model, and Pika Labs’ model.

Early explorations show how professional video editors could, in the future, leverage video generation models from OpenAI and Runway, integrated in Premiere Pro, to generate B-roll to edit into their project. They also show how Pika Labs could be used with the Generative Extend tool to add a few seconds to the end of a shot.

By delivering new generative AI capabilities powered by Adobe Firefly and a variety of third-party models, Adobe is giving customers access to a range of new capabilities without having to leave the workflows they use every day in Premiere Pro.

“Adobe is reimagining every step of video creation and production workflow to give creators new power and flexibility to realise their vision. By bringing generative AI innovations deep into core Premiere Pro workflows, we are solving real pain points that video editors experience every day, while giving them more space to focus on their craft.”

– Ashley Still, Senior Vice President, Creative Product Group at Adobe

Adobe also announced the upcoming general availability of AI-powered audio workflows in Premiere Pro, including new fade handles, clip badges, dynamic waveforms, AI-based category tagging and more.

The future of generative AI in Premiere Pro

Adobe showcased a technology preview of generative AI workflows coming to Premiere Pro later this year, powered by a new video model for Firefly. In addition, an early “sneak” shows how professional editors might leverage video generation models from OpenAI and Runway in the future to generate B-roll, or how they might use Pika Labs with the Generative Extend tool to add a few seconds to the end of a shot.

Generative Extend

Seamlessly add frames to make clips longer, so it’s easier to perfectly time edits and add smooth transitions. This breakthrough technology solves a common problem professional editors run into every day, allowing them to create extra media for fine-tuning edits, to hold on a shot for an extra beat or to better cover a transition.

Object Addition & Removal

Simply select and track objects, then replace them. Remove unwanted items, change an actor’s wardrobe or quickly add set dressings such as a painting or photorealistic flowers on a desk.

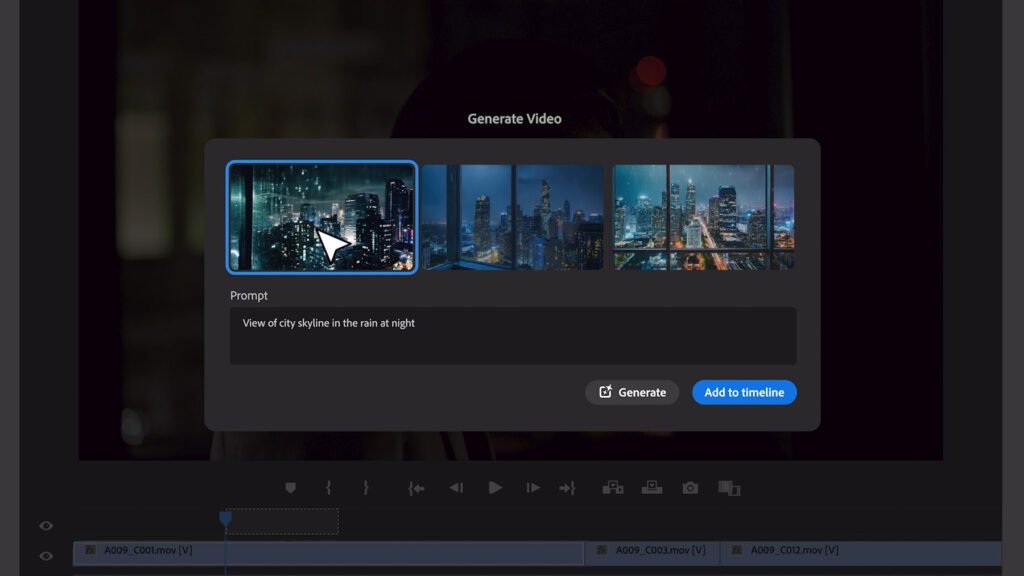

Text to Video

Generate entirely new footage directly within Premiere Pro. Simply type text into a prompt or upload reference images. These clips can be used to ideate and create storyboards, or to create B-roll for augmenting live-action footage.

While much of the early conversation about generative AI has focused on competition among companies to produce the “best” AI model, Adobe sees a future that’s much more diverse. Adobe’s decades of experience with AI show that AI-generated content is most useful when it’s a natural part of what you do every day. For most Adobe customers, generative AI is just a starting point and source of inspiration to explore creative directions.

Adobe aims to provide industry-standard tools and seamless workflows that let users use any materials from any source across any platform to create at the speed of their imaginations. Whether that means Adobe Firefly or other specialised AI models, Adobe is working to make the integration process as seamless as possible from within Adobe applications.

Adobe has developed its own AI models with a commitment to responsible innovation and plans to apply what it has learned to ensure that the integration of third-party models within its applications is consistent with the company’s safety standards.

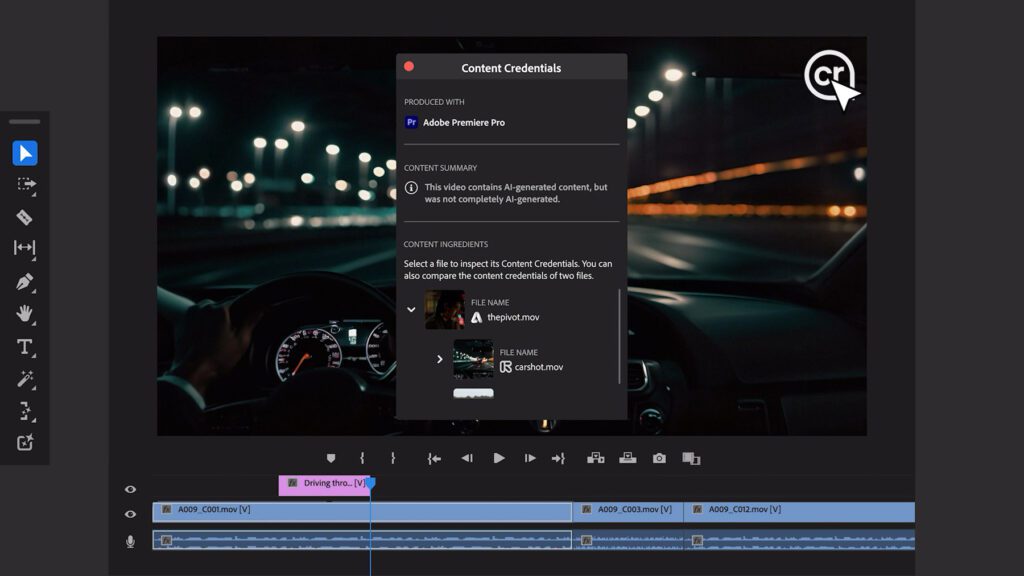

As one of the founders of the Content Authenticity Initiative, Adobe pledges to attach Content Credentials – free, open-source technology that serves as a nutrition label for online content – to assets produced within its applications so users can see how content was made and what AI models were used to generate the content created on Adobe platforms.

AI-powered audio workflows coming to Premiere Pro

In addition to Adobe’s new generative AI video tools, new audio workflows in Premiere Pro will be generally available to customers in May, giving editors everything they need to precisely control and improve the quality of their sound. The latest features include:

Interactive fade handles

Editors can create custom audio transitions faster than ever by simply dragging clip handles to create audio fades.

New Essential Sound badge with audio category tagging

AI automatically tags audio clips as dialogue, music, sound effects or ambience, and adds a new icon so editors get one-click, instant access to the right controls for the job.

Effect badges

New visual indicators make it easy to see which clips have effects, quickly add new ones, and automatically open effect parameters right from the sequence.

Redesigned waveforms in the timeline

Waveforms intelligently resize as the track height changes on clips, while gorgeous new colours make sequences easier to read.

In addition, the AI-powered Enhance Speech tool – which instantly removes unwanted noise and improves poorly recorded dialogue – has been generally available since February.