Robotics is one of the hottest industries right now, and it's something Australians need to stay on top of to compete for the jobs of tomorrow: programming robots.

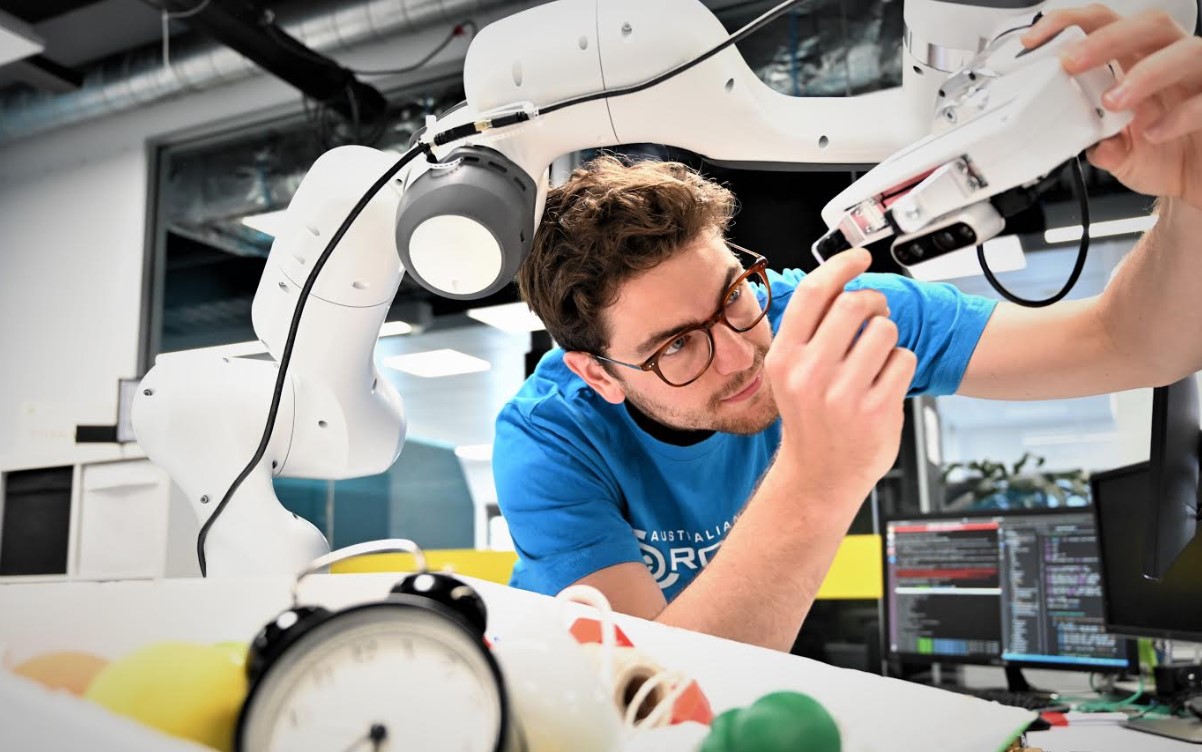

Doug Morrison is a 28-year-old Aussie who spent five months at Amazon Robotics in Berlin (November 2018 – March 2019). The PhD researcher works in the Australian Centre for Robotic Vision’s QUT-based Lab.

Morrison was involved with a Robotic Vision project that left other global research standing still, showcasing how robots can be trained to handle the complex task of visually identifying objects, grasping them and removing clutter.

“The idea behind it is actually quite simple.

“Our aim at the Centre is to create truly useful robots able to see and understand like humans. So, in this project, instead of a robot looking and thinking about how best to grasp objects from clutter while at a standstill, we decided to help it move and think at the same time.

“A good analogy is how we ‘humans’ play games like Jenga or Pick Up Sticks. We don’t sit still, stare, think, and then close our eyes and blindly grasp at objects to win a game. We move and crane our heads around, looking for the easiest target to pick up from a pile.”

PhD Researcher Doug Morrison, who in 2018 turned heads with his open-source GG-CNN, a network that enables robots to grasp moving objects in cluttered spaces more accurately and quickly.

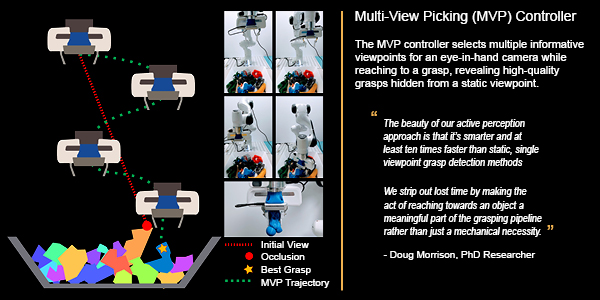

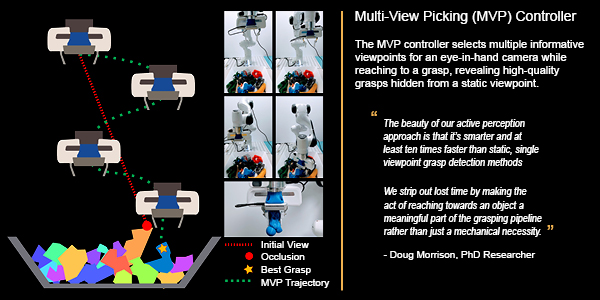

As outlined in a research paper presented at the 2019 International Conference on Robotics and Automation in Montreal, the project’s ‘active perception’ approach is the first in the world to focus on real-time grasping by stepping away from a static camera position or fixed data-collection routines.

It is also unique in the way it builds up a ‘map’ of grasps in a pile of objects, which continually updates as the robot moves. This real-time mapping predicts the quality and pose of grasps at every pixel in a depth image, all at a speed fast enough for closed-loop control at up to 30 Hz.
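To make the idea of a per-pixel grasp ‘map’ concrete, here is a minimal Python sketch. It is not the Centre’s code: the `Grasp` class, the `best_grasp` function and the toy numbers are all hypothetical, and it assumes the network has already produced a quality score and a gripper angle for every pixel of the depth image.

```python
from dataclasses import dataclass


@dataclass
class Grasp:
    row: int        # pixel row in the depth image
    col: int        # pixel column in the depth image
    quality: float  # predicted chance of a successful grasp (0 to 1)
    angle: float    # predicted gripper rotation, in radians
    depth: float    # distance to the surface at that pixel, in metres


def best_grasp(quality_map, angle_map, depth_image):
    """Return the grasp at the pixel with the highest predicted quality."""
    best = None
    for r, row in enumerate(quality_map):
        for c, quality in enumerate(row):
            if best is None or quality > best.quality:
                best = Grasp(r, c, quality, angle_map[r][c], depth_image[r][c])
    return best


# Toy 2x3 "images" with made-up values, purely for illustration.
quality = [[0.1, 0.4, 0.2],
           [0.3, 0.9, 0.5]]
angle = [[0.0, 0.1, 0.2],
         [0.3, 0.4, 0.5]]
depth = [[0.60, 0.61, 0.62],
         [0.58, 0.55, 0.57]]

g = best_grasp(quality, angle, depth)
print(g)
```

In a closed-loop setup, a selection step like this would be repeated on every new camera frame, so the target can change as the arm moves.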

“The beauty of our active perception approach is that it’s smarter and at least ten times faster than static, single viewpoint grasp detection methods.

“We strip out lost time by making the act of reaching towards an object a meaningful part of the grasping pipeline rather than just a mechanical necessity.

“Like humans, this allows the robot to change its mind on the go in order to select the best object to grasp and remove from a messy pile of others.”

PhD Researcher Doug Morrison

Doug has tested and validated his active perception approach at the Centre’s QUT-based Lab in ‘tidy-up’ trials using a robotic arm to remove 20 objects, one at a time, from a pile of clutter.

His approach achieved an 80% success rate when grasping in clutter, up 12% on traditional single-viewpoint grasp detection methods.

The genius comes in his development of a Multi-View Picking (MVP) controller, which selects multiple informative viewpoints for an eye-in-hand camera while reaching to a grasp, revealing high-quality grasps hidden from a static viewpoint.

“Our approach directly uses entropy in the grasp pose estimation to influence control, which means that by looking at a pile of objects from multiple viewpoints on the move, a robot is able to reduce uncertainty caused by clutter and occlusions.

“It also feeds into safety and efficiency by enabling a robot to know what it can and can’t grasp effectively. This is important in the real world, particularly if items are breakable, like glass or china tableware messily stacked in a washing-up tray with other household items.”

PhD Researcher Doug Morrison
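The role entropy plays in choosing where to look can be illustrated with a simplified Python sketch. This is not the published MVP controller; it assumes a hypothetical grid in which each cell stores the estimated probability that a good grasp exists there, and a hypothetical list of the cells each candidate viewpoint can see. The sketch simply picks the viewpoint that covers the most uncertainty.

```python
import math


def binary_entropy(p):
    """Uncertainty, in bits, of a yes/no estimate with probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0  # a certain estimate carries no uncertainty
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))


def most_informative_view(grasp_prob, views):
    """Pick the viewpoint whose visible cells have the highest total entropy.

    grasp_prob: grid of probabilities that a good grasp exists in each cell.
    views: dict mapping a viewpoint name to the (row, col) cells it can see.
    """
    def score(cells):
        return sum(binary_entropy(grasp_prob[r][c]) for r, c in cells)

    return max(views, key=lambda name: score(views[name]))


# Made-up example: 0.5 means "no idea", values near 0 or 1 mean "fairly sure".
grasp_prob = [[0.5, 0.9],
              [0.99, 0.5]]
views = {
    "left": [(0, 0), (1, 0)],
    "right": [(0, 1), (1, 1)],
    "top": [(0, 0), (1, 1)],
}

chosen = most_informative_view(grasp_prob, views)
print(chosen)  # "top": it sees both of the most uncertain cells
```

A real controller would also have to weigh how far the arm must travel to reach each viewpoint, which this sketch leaves out.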

Doug’s next step, as part of the Centre’s ‘Grasping with Intent’ project funded by a US$70,000 Amazon Research Award, moves from safe and effective grasping into the realm of meaningful vision-guided manipulation.

“In other words, we want a robot to not only grasp an object, but do something with it; basically, to usefully perform a task in the real world.

“Take, for example, setting a table, stacking a dishwasher or safely placing items on a shelf without them rolling or falling off.”

PhD Researcher Doug Morrison

Doug also has his sights set on fast-tracking how a robot actually learns to grasp physical objects. Instead of using ‘human’ household items, he wants to create a truly challenging training data set of weird, adversarial shapes.

“It’s funny because some of the objects we’re looking to develop in simulation could better belong in a futuristic science fiction movie or alien world – and definitely not anything humans would use on planet Earth!”

PhD Researcher Doug Morrison

There is, however, method in this scientific madness. As Doug explains, training robots to grasp ‘human’ items is neither efficient nor beneficial for the robot.

“At first glance, a stack of human household items might look like a diverse data set, but most are pretty much the same. For example, cups, jugs, flashlights and many other objects all have handles, which are grasped in the same way and do not demonstrate difference or diversity in a data set.

“We’re exploring how to put evolutionary algorithms to work to create new, weird, diverse and different shapes that can be tested in simulation and also 3D printed.

“A robot won’t get smarter by learning to grasp similar shapes. A crazy, out-of-this-world data set of shapes will enable robots to quickly and efficiently grasp anything they encounter in the real world.”

PhD Researcher Doug Morrison
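For readers curious how an evolutionary algorithm could push a set of shapes towards diversity, here is a toy Python sketch. It is an assumption-laden illustration, not the Centre’s method: each ‘shape’ is a hypothetical list of three numbers between 0 and 1, and the fitness used here (distance to the nearest other shape, a simple novelty score) is one choice among many.

```python
import random


def distance(a, b):
    """Euclidean distance between two shape parameter lists."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


def novelty(shape, others):
    """How far a shape is from its nearest neighbour."""
    return min(distance(shape, other) for other in others)


def mean_pairwise_distance(shapes):
    """Average distance between all pairs: a rough measure of diversity."""
    pairs = [(a, b) for i, a in enumerate(shapes) for b in shapes[i + 1:]]
    return sum(distance(a, b) for a, b in pairs) / len(pairs)


def evolve(population, generations, rng, sigma=0.1):
    """Mutate every shape each generation and keep the most novel ones."""
    size = len(population)
    for _ in range(generations):
        # Each child is a parent with small random noise, clamped to [0, 1].
        children = [
            [min(1.0, max(0.0, gene + rng.gauss(0.0, sigma))) for gene in parent]
            for parent in population
        ]
        pool = population + children
        ranked = sorted(
            pool,
            key=lambda s: novelty(s, [o for o in pool if o is not s]),
            reverse=True,
        )
        population = ranked[:size]
    return population


rng = random.Random(0)                           # fixed seed for repeatable runs
start = [[0.5, 0.5, 0.5] for _ in range(6)]      # six identical starting shapes
evolved = evolve(start, generations=20, rng=rng)

print(mean_pairwise_distance(start))    # 0.0, since every shape is the same
print(mean_pairwise_distance(evolved))  # greater than zero after evolution
```

In practice each candidate shape would also need to be scored in a grasping simulation, which is well beyond this sketch.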

Researchers from the Australian Centre for Robotic Vision are this week (4-8 November) leading workshops, including a focus on autonomous object manipulation, at the International Conference on Intelligent Robots and Systems (IROS 2019) in Macau.

In another hot topic on the conference program, they will delve into why robots, like humans, can suffer from overconfidence, in a workshop on the importance of uncertainty for deep learning in robotics. The workshop will feature the Centre’s world-first Robotic Vision Challenge, created to help robots sidestep the pitfalls of overconfidence.