During Tesla’s 2022 Annual Shareholder Meeting this week, the company revealed a new image to promote the upcoming AI DAY: PART II. The event, scheduled for September 30th, will build on last year’s AI Day, where the TeslaBot was first revealed.

The teaser image showcases the hands of what we expect is the TeslaBot prototype and provides a lot of insight into Tesla’s progress on the humanoid robot.

Before we dive into each one of the focus areas for this breakdown, I wanted to touch on my expectations for the TeslaBot demo. When Tesla first announced the TeslaBot, many left with strong doubts that Tesla could achieve anything remotely close to the statue they presented on stage and later at the CyberRodeo event in Texas.

Tesla has zero runs on the board when it comes to building humanoid robots, but the whole reason they’re getting into this space is that they believe they have the necessary pieces of the puzzle to make it a reality. There have been humanoid robots before, but what we’re expecting from Tesla is one that actually resembles the human form, a dramatic step forward from toys like Honda’s ASIMO or SoftBank Robotics’ Pepper. The TeslaBot should also be a massive step forward in design and functionality from what many consider the leader in this space, Boston Dynamics.

This new image provides real hope that Tesla is on a path to delivering the form factor they have shown previously, and it actually gives us a look at the inner workings of the arms, wrists and fingers. Sure, this doesn’t have consumer-friendly coverings, but the biggest question is not about Tesla’s capacity to produce hard plastic with a glossy white paint finish, but rather whether they can make a functional robot in the dimensions and design they showed on stage.

After seeing this image, I expect the prototype we’ll see about 8 weeks from now to be a working humanoid robot that can stand, walk, and even pick up, categorise and place objects using its computer vision system. This may be fairly rudimentary at first, given it’s been just a year since we publicly learned of the TeslaBot, but I also expect it was in development at Tesla for some time before that.

It seems unlikely Tesla would show an image like this to build excitement for AI Day 2 if they didn’t already have the batteries powering the microcontrollers, and the microcontrollers talking to the actuators that move the different appendages. The question is whether the movements we’ll see from the Bot are pre-programmed, or whether it is already processing dynamic inputs and requests and responding accordingly.

What would be mind-blowing is seeing a number of these bots working together to solve a task. For example, they could identify that an object is too heavy for a single bot and work together to move it from point A to point B.

I would also be very impressed to see a bot that is executing a task adapt dynamically to objects placed in its way (without falling over).

I would also love to hear details on the battery life. While they certainly won’t have optimised the hardware and software yet, these bots need to run for multiple hours between charges to deliver on the promise of greater efficiency and longer run time than humans.

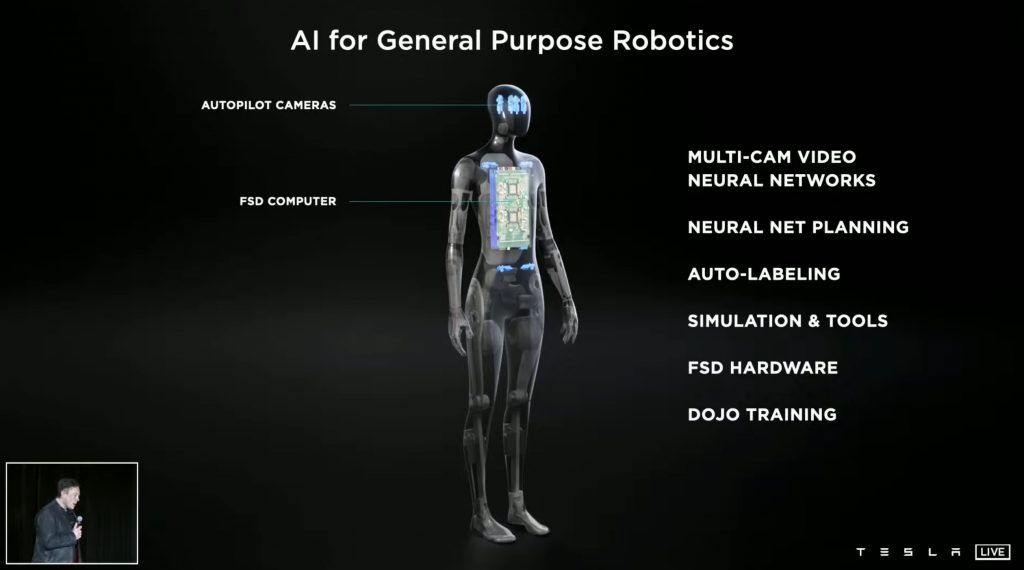

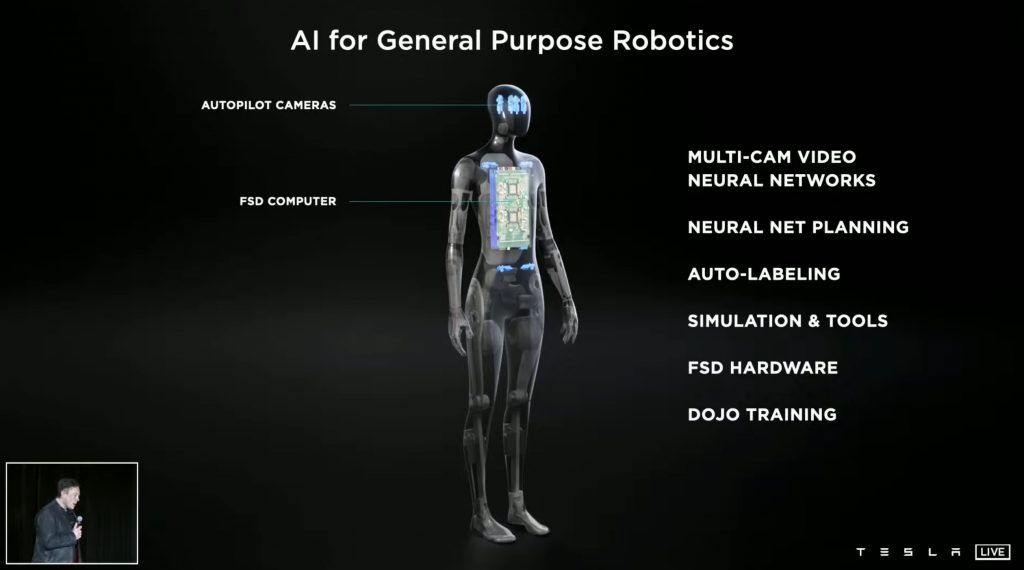

As a reminder, here are a couple of images from the TeslaBot announcement.

Now for the breakdown of the picture.

Aluminium endoskeleton

For the TeslaBot to work, it needs the structural strength that our bones and muscles typically provide. In this case, TeslaBot’s endoskeleton looks to be made up of a series of pieces, articulated with joints and powered by electric actuators. We know from the Gigacastings that Tesla are able to leverage SpaceX material science to create new alloys, but I expect what we’re seeing here is a type of aluminium, which offers both strength and light weight.

We know the bot will weigh around 57kg (125lbs), but we don’t know how many batteries it will require or how they will be distributed. It would make sense to house them in the torso and possibly the legs to keep the center of gravity as low as possible.
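To make the center-of-gravity point concrete, here is a minimal sketch that treats the bot as a couple of lumped masses and computes the height of the combined center of mass as a mass-weighted average. The part names, masses and heights are made-up illustrative values totalling 57kg (roughly the announced 125lbs), not Tesla figures.

```python
from dataclasses import dataclass


@dataclass
class Part:
    name: str
    mass_kg: float
    height_m: float  # height of this part's own center of mass above the floor


def com_height_m(parts: list[Part]) -> float:
    """Mass-weighted average height of all parts (the combined center of mass)."""
    total_mass = sum(p.mass_kg for p in parts)
    return sum(p.mass_kg * p.height_m for p in parts) / total_mass


# Hypothetical layouts: everything except the battery position is identical.
frame = Part("frame, actuators, electronics", 45.0, 0.90)
battery_high = [frame, Part("battery in upper chest", 12.0, 1.30)]
battery_low = [frame, Part("battery in lower torso", 12.0, 0.95)]

print(f"battery high: {com_height_m(battery_high):.3f} m")  # 0.984 m
print(f"battery low:  {com_height_m(battery_low):.3f} m")   # 0.911 m
```

In this toy model, moving the same 12kg battery 35cm lower drops the overall center of mass by about 7cm, which is why packing the heaviest components low in the body helps stability.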

It’s almost a shame to hide all this engineering behind an exterior, but it will inevitably be encased in a protective covering that gives the bot its distinctive appearance.

Fingertip sensors

In the tips of the fingers, most visibly on the thumb, there appears to be a hole, which likely contains a sensor. This could work similarly to the ultrasonic sensors in our cars, which determine how far the car is from an object. In the Bot’s case, hopefully we see cm precision become mm precision, which is important for handling objects appropriately.
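If the fingertip holes do house ultrasonic sensors (a guess, not something Tesla has confirmed), they would measure distance the way any time-of-flight sensor does: emit a pulse, time the echo, and halve the round-trip path. This sketch assumes sound travelling through dry air at about 20 °C (roughly 343 m/s) and shows why going from cm to mm precision means resolving the echo timing ten times more finely.

```python
SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in dry air at about 20 °C


def echo_distance_m(round_trip_s: float, speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Distance to an object from the round-trip time of an echo."""
    # The pulse travels to the object and back, so the one-way distance is half the path.
    return speed_m_s * round_trip_s / 2.0


def timing_resolution_s(distance_step_m: float, speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Echo-timing resolution needed to tell apart distances distance_step_m apart."""
    return 2.0 * distance_step_m / speed_m_s


print(f"echo after 1 ms -> {echo_distance_m(1e-3) * 100:.2f} cm")           # 17.15 cm
print(f"1 cm steps need {timing_resolution_s(0.01) * 1e6:.1f} us timing")   # 58.3 us
print(f"1 mm steps need {timing_resolution_s(0.001) * 1e6:.2f} us timing")  # 5.83 us
```

Real-world precision also depends on the ultrasonic wavelength and on temperature shifting the speed of sound, so this only covers the timing side of the problem.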

Communication wiring

Each component of the bot needs to work in a precise symphony of movements, and to serve extremities like the fingers and fingertips, it looks like Tesla has some serious cable management here. The two joints of each finger and thumb appear to have wiring that wraps around the actuator and, I suspect, connects to the sensors in the fingertip while also telling each actuator how far to rotate.

Bi-directional thumbs

These hinge-looking thumb joints are really complex, but the complexity makes sense: the Bot will be far more useful if the thumb can rotate on two axes (like our own). A simple open-close mechanism would really restrict its ability to position the thumb and manipulate objects.

Gamers may think of it this way: a single hinge lets you place your thumb on a controller’s thumbstick and lift it off again, but if you want to rotate the thumbstick, you need sideways movement as well.
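A simplified way to see why the second axis matters is to model the thumb as a single rigid link with a flex angle (the open-close motion) and an abduction angle (the sideways swing). This is a hypothetical two-angle model for illustration, not Tesla’s actual joint layout. With abduction fixed at zero, every reachable tip position lies in one plane; the second axis lets the tip leave that plane.

```python
import math


def thumb_tip(length_m: float, flex_rad: float, abduct_rad: float) -> tuple[float, float, float]:
    """Tip position of a one-link thumb with two rotational axes (simplified model)."""
    # Flexion swings the link within the x-z plane (the open-close motion).
    reach = length_m * math.cos(flex_rad)
    z = length_m * math.sin(flex_rad)
    # Abduction rotates that plane about the z axis (the sideways motion).
    return (reach * math.cos(abduct_rad), reach * math.sin(abduct_rad), z)


# One axis only: y is always 0, so the tip is confined to a single plane.
hinge_only = [thumb_tip(0.05, math.radians(a), 0.0) for a in (0, 30, 60, 90)]
print(all(y == 0.0 for _, y, _ in hinge_only))  # True

# Two axes: the same flex angle can now point the tip sideways.
print(thumb_tip(0.05, math.radians(30), math.radians(40)))
```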

Having the capacity to move in almost every direction with almost every joint sounds really beneficial, but it will also consume more battery, so it’ll be down to Tesla’s software optimisation to make lean use of these movements. Engineers will likely have to balance making the robot move in a human-like way against saving power and extending battery life.

If the TeslaBot looks human, but then walks and moves very much like a machine, it’ll be really disappointing and you’d then have to ask the question, why bother making it look like a human in the first place?

If Tesla is able to ramp production of their more energy-dense 4680 cells and use them in the Bot, then they may have enough energy capacity that this trade-off is no longer a decision. This raises the question of the TeslaBot’s timeline, and that’s something I hope we learn a lot more about once we’ve seen the prototype. Based on the progress and functionality shown, it’ll be pretty clear whether this is one year or five years away from being a reality.

Wrist hinges

The hinges on the wrist appear strong and robust, as they likely need to be to support the weight of the hand and the 20kg (45lbs) carrying capacity. At first glance, they appear to be a regular cylinder running through the mounting point, capped off with nuts on either end.

If you look closely, though, the side facing the camera has a black finish with a hole in the center, and there are no regular hexagonal nuts to wrench on. There also appears to be a cutout in the grey endplate. Perhaps this is for weight saving, but it may actually act as a rotational limit, similar to a steering lock, which would mean the actuators could never over-rotate the joint.
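The steering-lock idea has a common software counterpart: clamp every commanded angle into a safe range before it reaches the actuator, so even a bad command cannot drive the joint past its stop. This sketch uses a hypothetical `JointLimits` type and made-up wrist limits of ±70°; a software limit like this would complement, not replace, a mechanical stop like the one the cutout might provide.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JointLimits:
    min_deg: float
    max_deg: float

    def __post_init__(self) -> None:
        if self.min_deg > self.max_deg:
            raise ValueError("min_deg must not exceed max_deg")


def limit_command(target_deg: float, limits: JointLimits) -> float:
    """Clamp a commanded joint angle into the allowed range."""
    return max(limits.min_deg, min(limits.max_deg, target_deg))


WRIST_PITCH = JointLimits(-70.0, 70.0)  # hypothetical values, for illustration only

for cmd in (30.0, 95.0, -120.0):
    print(cmd, "->", limit_command(cmd, WRIST_PITCH))
# 30.0 -> 30.0
# 95.0 -> 70.0
# -120.0 -> -70.0
```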

Microcontroller

On the top of each arm, there appears to be a box. I assume this is not a local battery pack, given the weight at this point of the arm would be difficult to deal with. Instead, I believe these could be microcontrollers that manage the instructions from the brain (the FSD computer) and may even receive them wirelessly. Given the side we see is polished aluminium, and metal enclosures block radio signals, it would need some kind of break to allow wireless signals to flow between the components.

Not having wires running from the main limbs all the way back to the HW3/4 computer would remove a lot of wiring weight and complexity. This does assume Tesla is able to work through any interference and security issues of using a wireless protocol. Wireless communication between components has been discussed as a future state for cars, so the Bot could be a great opportunity to explore an implementation of that system.

The only issue with this theory is the power puzzle. Even if you manage to communicate wirelessly, you’ll still need to power the actuators in the human-like hands, elbows, shoulders and legs, so you’ll still need wiring to deliver that power.
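On the security issues of a wireless link mentioned above, one standard building block is message authentication: each command carries a tag computed with a shared secret key, and the receiving microcontroller rejects any frame whose tag doesn’t match, or whose sequence number isn’t newer than the last one it accepted (to block replays). The frame layout and names below are hypothetical, and Tesla hasn’t said it uses anything like this; the sketch relies only on Python’s standard `hmac`, `hashlib` and `struct` modules, and it authenticates commands without encrypting them.

```python
import hashlib
import hmac
import struct

# Hypothetical frame layout: joint id (uint8), target angle in hundredths of a
# degree (int16), sequence number (uint32), all big-endian, followed by the tag.
FRAME_FMT = ">BhI"
PAYLOAD_LEN = struct.calcsize(FRAME_FMT)  # 7 bytes
TAG_LEN = hashlib.sha256().digest_size    # 32 bytes


def pack_command(key: bytes, joint_id: int, angle_cdeg: int, seq: int) -> bytes:
    """Serialize a joint command and append an HMAC-SHA256 tag."""
    payload = struct.pack(FRAME_FMT, joint_id, angle_cdeg, seq)
    return payload + hmac.new(key, payload, hashlib.sha256).digest()


def unpack_command(key: bytes, frame: bytes, last_seq: int) -> tuple[int, int, int]:
    """Verify a frame's tag and freshness, then return (joint_id, angle_cdeg, seq)."""
    if len(frame) != PAYLOAD_LEN + TAG_LEN:
        raise ValueError("unexpected frame length")
    payload, tag = frame[:PAYLOAD_LEN], frame[PAYLOAD_LEN:]
    expected = hmac.new(key, payload, hashlib.sha256).digest()
    # Constant-time comparison avoids leaking how many tag bytes matched.
    if not hmac.compare_digest(tag, expected):
        raise ValueError("authentication failed")
    joint_id, angle_cdeg, seq = struct.unpack(FRAME_FMT, payload)
    if seq <= last_seq:
        raise ValueError("stale or replayed frame")
    return joint_id, angle_cdeg, seq


key = b"example-shared-secret"  # placeholder; a real key would be random and provisioned securely
frame = pack_command(key, joint_id=3, angle_cdeg=-4500, seq=1)
print(unpack_command(key, frame, last_seq=0))  # (3, -4500, 1)
```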

When you compare this square box on top of the wrist with the prototype shown on stage, it’s hard to imagine it all fitting under the external shroud, as the wrist is one of the smallest-diameter parts of the body.

Another explanation is that this is temporary: a larger version than what will end up in production, simply because we’re getting an early look. I actually expect the TeslaBot shown on stage to be slightly bigger (perhaps 5%), since the miniaturisation work is unlikely to have been done at this stage. Make it work first, then make it look good and last longer later, would be my approach.

Leave a comment below and let us know your expectations of TeslaBot at AI Day 2 next month.

Fantastic analysis, much appreciated.

Your analysis is moot as the picture shared by Tesla has actually come from a stock photography site…

Some points re workings might be valid, but not based on this picture.

Got a link to this stock image?