Tesla’s Full Self-Driving V12 software update may share the same name as previous versions, but we should think about this release as being almost a completely new piece of software.

Architecturally this release is built differently.

Tesla started their autonomous efforts by using mostly human-crafted code, which we can think of as a series of “if this, then that” rules. Over the years, an increasing amount of the software stack has been replaced with AI.
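To make the “if this, then that” idea concrete, here is a minimal sketch in Python of what hand-written driving logic can look like. The `Scene` fields, the 10 metre threshold and the action names are all invented for illustration; none of this is taken from Tesla’s code.

```python
from dataclasses import dataclass


@dataclass
class Scene:
    """Hypothetical summary of what the perception system reports."""
    light_is_red: bool
    pedestrian_ahead: bool
    lead_car_gap_m: float


def rule_based_action(scene: Scene) -> str:
    """Hand-written rules, checked in priority order (illustrative only)."""
    if scene.pedestrian_ahead:
        return "brake"
    if scene.light_is_red:
        return "stop"
    if scene.lead_car_gap_m < 10.0:  # assumed threshold, not a real value
        return "slow"
    return "cruise"
```

Every new situation needs another rule, written and ordered by a human, which is why this style of code grows so large.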

The 8 cameras mounted around the car sent their frames to the FSD computer for processing. The AI model would infer what the objects ahead of the car were: a human, a traffic light and so on. As time went by, Tesla evolved this to be sophisticated enough to understand the trajectories of moving objects (e.g. people and other vehicles), and where there were issues, Tesla would use a team of humans to label the content, allowing the system to learn.

Over the years Tesla got increasingly efficient at this, moving to auto-labelling for much of the grunt work, and ultimately to labelling video rather than individual frames. At times, what the car sees in any single frame isn’t enough to make the right driving decision. A video memory allowed the system to accommodate omissions in the data, like arrows painted on turning lanes that disappear under adjacent cars.
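The benefit of remembering earlier frames can be shown with a toy example. The sketch below carries the last seen lane marking forward while it is hidden, up to an assumed `max_age` in frames. Tesla’s system is a learned video network rather than a lookup like this, so treat the function name and logic as a simplified stand-in.

```python
from typing import List, Optional


def fill_with_memory(observations: List[Optional[str]],
                     max_age: int = 30) -> List[Optional[str]]:
    """Carry the last seen marking over frames where it is hidden (None).

    A remembered marking is forgotten once it has been hidden for more
    than max_age consecutive frames.
    """
    filled: List[Optional[str]] = []
    last: Optional[str] = None
    age = 0
    for obs in observations:
        if obs is not None:
            last, age = obs, 0          # fresh observation resets the memory
        elif last is not None:
            age += 1
            if age > max_age:
                last = None             # too old to trust any more
        filled.append(obs if obs is not None else last)
    return filled
```

For example, a left-turn arrow seen in one frame and then covered by a neighbouring car would still be reported for the next few frames, instead of vanishing the moment it is occluded.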

Every new release would iterate and improve on the last with the occasional regression, but as a trajectory, the software was getting better as AI ate more of the software stack.

In recent iterations, Tesla introduced new concepts like the occupancy network, which enabled the car to evaluate if there were solid objects that should be avoided, along with depth perception, all done using just cameras as inputs.

What’s different about FSD V12

With version 12, Tesla has replaced more than 300,000 lines of human-crafted code, instead relying on an AI model trained on a massive volume of driving clips. The driving examples are fed to the training compute cluster, in which Tesla has invested heavily.

Tesla’s training cluster consists of tens of thousands of Nvidia’s best GPUs, combined with their own Dojo hardware, an investment of hundreds of millions of dollars.

By feeding the system lots of examples of good driving, the AI model effectively learns how to drive. The magic of this approach is that it doesn’t require labelling of data; the model learns by seeing, but it requires a ridiculous amount of data and compute to do so.
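Learning from examples of good driving is generally known as imitation learning, or behaviour cloning: the controls the human driver applied act as the target, so nobody has to label anything by hand. The sketch below is a deliberately tiny version of that idea, assuming a single input (how far the car sits from the lane centre) and a single output (steering). The data and names are made up, and a real model works from raw video with a neural network rather than one fitted number.

```python
from typing import Callable, List, Tuple


def fit_policy(demos: List[Tuple[float, float]]) -> float:
    """Least-squares fit of steering = w * offset from (offset, steering) pairs."""
    num = sum(offset * steering for offset, steering in demos)
    den = sum(offset * offset for offset, _ in demos)
    return num / den


def make_policy(w: float) -> Callable[[float], float]:
    """Return a function that maps a lane offset to a steering command."""
    return lambda offset: w * offset


# Hypothetical demonstrations: (lane offset in metres, steering the human applied)
demos = [(-1.0, 0.5), (0.5, -0.25), (2.0, -1.0)]
w = fit_policy(demos)
policy = make_policy(w)
```

The fitted policy steers back towards the lane centre for offsets it has never seen, purely because that is what the demonstrations did.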

Tesla trains their model on video data collected from the millions of Tesla vehicles on the road, around the world. Having accumulated its driving skills, similar to humans, the model is pushed to the car via a software update.

This morning we learned the current build is 2023.38.10.

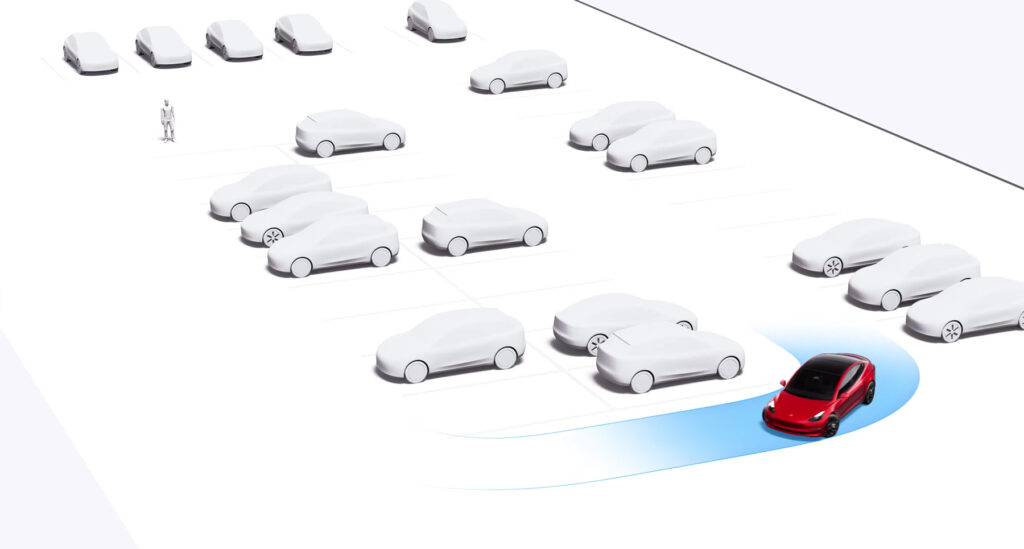

With the AI model in the car, video streams from the car’s cameras are fed to the FSD computer, which in turn drives the car by outputting the results via the steering wheel and pedals.

This video in, control out, is known in the AI world as end-to-end, something that’s been chased for years, but is only possible now.

We don’t yet know how well V12 will accommodate the many, many edge cases that we encounter while driving, outside what we saw in Elon Musk’s livestream a couple of months ago.

What we did see in that stream is that the car was driving itself, turning corners, stopping at intersections, changing lanes to follow a route and even parking, not because it was programmed, but because it had learned how to drive, think and respond to the environment around it.

In previous versions of the software, Tesla had opportunities to add logic to improve the shortcomings of the software, like crossing lane lines where there were double parked cars, but the path to resolve issues now changes with V12.

If there are issues that result in the driver disengaging, Tesla will now need to capture lots of examples of where that scenario is done correctly and update the model after another training run. A couple of important things we don’t know are how long it takes Tesla to run new training (days, weeks, months?) and how many of these issues we’ll see.

It is possible that we see other existing features like smart summon improve dramatically off the back of this new architecture and possibly the long-awaited reverse smart summon feature that would see you get out of the vehicle and let it go find a park. Given we saw a Tesla park itself during Musk’s livestream, it’s technically possible now, but we don’t know how well it works around other parking scenarios.

Global consequences of FSD V12?

Initially, Tesla will roll out V12 in the US and Canada, but this fundamental change to how the car learns and drives will mean the international rollout of FSD just got accelerated. Tesla has sold FSD in many markets around the world for years and, so far, has only delivered a portion of the overall functionality.

In Australia, we have access to the following items:

- Navigate on Autopilot

- Auto Lane Change

- Autopark

- Summon

- Smart Summon

We don’t yet have Autosteer on city streets. This is the most important feature, as it enables the car to take corners on city streets, navigate roundabouts and drive on unmarked roads.

Different markets have a surprising amount of variety, providing an opportunity to find shortcomings of the model, and this would again take more training to resolve. With a pipeline of video in, model and over-the-air software updates out, Tesla should have everything it needs to actually functionally complete FSD.

While many imagine that driving on the opposite side of the road (RHD vs LHD) is the big hurdle, this is trivial to accommodate; it’s strange rules like hook turns that are fairly unique. Even road rules between seemingly similar parts of the world (e.g. Australia vs the UK) feature significant differences. The great thing about end-to-end is that road rules are learned through the training data, rather than prescribed to the model.

All going well, Australia and other similar countries could see FSD Beta for the first time in early 2024. I expect others will come later, either due to additional complexity in their driving environments, a lack of data, or regulatory restrictions.

The road to autonomy

As Tesla completes the list of features (importantly Autosteer on city streets), they will be able to recognise more of the FSD revenue and increase adoption rates, as more people genuinely find value in FSD on customer-owned vehicles.

The big win with delivering FSD will be establishing a robotaxi network, but this can only happen once Tesla achieves Level 4 autonomy. To tick this box, Tesla would need to gain regulatory approval in each market.

If successful in this, then Tesla opens the door to the biggest revenue opportunity, taking a percentage of rideshare trips, while avoiding the cost of paying a driver. To achieve this, Tesla will need a concentration of FSD-enabled cars (either Tesla-owned or customer-owned) in a given location to deliver a ride within an acceptable timeframe (similar to Uber, a few minutes). For this reason it is likely that Tesla releases ride-sharing on a city-by-city basis, despite the technology allowing for rides almost anywhere.

I’m worried about the RHD thing to be honest. It would be trivial when you’re using code, but the way FSD is programmed is very specific to the US and LHD in general. The fact there’s no code means you can’t just type “set {driveside} = $left” or something, because it’s baked in deep and codeless. The way it handles every sign, every car around it, every intersection, is baked deep for LHD.

They’ll need to rebuild the model from scratch using video footage from RHD countries almost exclusively. That means we’ll be driving based on a much smaller and weaker training model.

But I’m not suggesting reverting. It’s clear that end-to-end AI is the way to go. It’s just that it brings its own challenges. One solution might be to flip all the videos and then unflip signage before feeding it in.
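Roughly what I mean by flip and unflip, as a Python sketch. It assumes each frame is a plain grid of pixel values and that something else has already found the sign boxes (`sign_boxes` is hypothetical, as are the function names). The recorded steering would need its sign flipped too. Whether this would actually produce usable training data is a guess on my part.

```python
from typing import List, Tuple

Frame = List[List[int]]
Box = Tuple[int, int, int, int]  # (top, left, bottom, right), bottom/right exclusive


def mirror_frame(frame: Frame, sign_boxes: List[Box]) -> Frame:
    """Mirror a frame left-to-right, then un-mirror the pixels inside each sign.

    sign_boxes are given in the ORIGINAL frame's coordinates.
    """
    width = len(frame[0])
    flipped = [row[::-1] for row in frame]
    for top, left, bottom, right in sign_boxes:
        # Where the box ends up after the whole frame is mirrored
        new_left, new_right = width - right, width - left
        for r in range(top, bottom):
            # Reverse just this strip so the sign reads the right way round again
            flipped[r][new_left:new_right] = flipped[r][new_left:new_right][::-1]
    return flipped


def mirror_steering(steering: float) -> float:
    """A left turn in the original clip becomes a right turn in the mirrored one."""
    return -steering
```

So the sign moves to the other side of the road along with everything else, but its text stays readable.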

But I think the long term solution is probably less about mass input mega-compute brute force training, which almost all AI is right now, and more of new methods such as the Neural Descriptor Fields being developed by MIT. Look up their article “An easier way to teach robots new skills”.